Executive Summary

Search engines rank pages based on what they understand a page represents. When entities are clear, pages are easier to classify, new content inherits existing signals, and links reinforce established authority instead of starting from zero. The result is more stable rankings and authority that compounds across related searches.

Key Terms Relating To Entity SEO

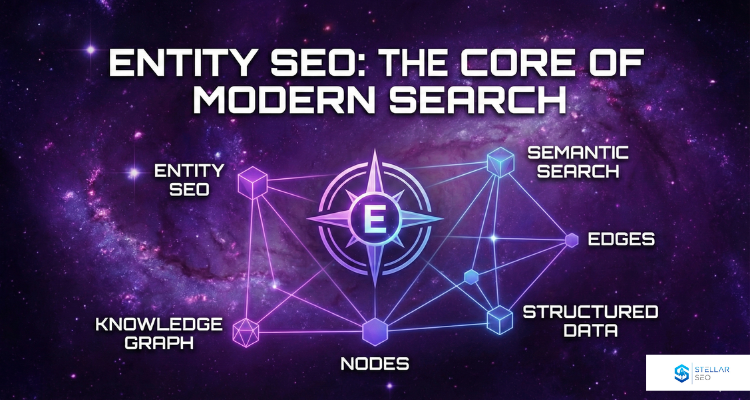

The following terms define how search engines interpret entities and relationships.

Entity

A singular, well-defined concept that can be uniquely identified. Entities include people, companies, locations, products, organizations, and specific models. In search, entities are treated as distinct “things,” not words.

Semantic Search

A search approach that evaluates meaning rather than exact wording. Semantic search focuses on intent, context, and relationships between entities instead of matching individual terms.

Knowledge Graph

Google’s internal system for storing entities and the factual relationships between them. The Knowledge Graph allows Google to connect people, brands, products, and concepts across queries, pages, and data sources.

Nodes and Edges

Structural components of the Knowledge Graph. A node represents an entity. An edge represents the relationship between two entities, such as ownership, location, or service provided.

Salience Scoring

A weighting process used to determine how central an entity is within a piece of content. Entities with higher salience are treated as the primary focus of the page rather than supporting references.
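To make the idea of salience concrete, here is a minimal sketch in Python. It is a toy heuristic, not how any search engine actually computes salience: it assumes that an entity mentioned more often and earlier in the text is more central. The function name `toy_salience`, the weighting formula, and the sample business "Acme Plumbing" are all hypothetical.

```python
import re
from collections import defaultdict

def toy_salience(text: str, entities: list[str]) -> dict[str, float]:
    """Score entities by mention count, weighting earlier mentions more.

    Illustrative heuristic only; real salience models are not public.
    """
    lowered = text.lower()
    length = max(len(lowered), 1)
    raw = defaultdict(float)
    for entity in entities:
        for match in re.finditer(re.escape(entity.lower()), lowered):
            # A mention at the very start weighs 2.0; one near the end about 1.0.
            raw[entity] += 2.0 - match.start() / length
    total = sum(raw.values())
    # Normalize so the scores of all listed entities sum to 1.
    return {e: (raw[e] / total if total else 0.0) for e in entities}

page = (
    "Acme Plumbing repairs water heaters in Austin. "
    "Acme Plumbing also installs tankless units. "
    "The company was founded in Austin."
)
scores = toy_salience(page, ["Acme Plumbing", "Austin", "tankless units"])
primary = max(scores, key=scores.get)
print(primary, scores)
```

In this sample the business name scores highest because it appears twice and leads the text, which mirrors the distinction between a primary entity and supporting references.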

Semantic Triple

A basic unit of stored knowledge consisting of a subject, predicate, and object. For example, a company providing a service forms a factual relationship that search systems can store and verify.
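A short Python sketch can show how triples relate to the nodes and edges described above. The `Triple` type, the predicate names, and the sample facts about "Acme Plumbing" are hypothetical and chosen only for illustration; they do not reflect any real knowledge base schema.

```python
from typing import NamedTuple

class Triple(NamedTuple):
    subject: str
    predicate: str
    obj: str

# Hypothetical facts about a made-up business.
triples = [
    Triple("Acme Plumbing", "provides", "water heater repair"),
    Triple("Acme Plumbing", "locatedIn", "Austin"),
    Triple("Jane Doe", "founded", "Acme Plumbing"),
]

# Nodes are every entity that appears as a subject or an object.
nodes = {t.subject for t in triples} | {t.obj for t in triples}

def edges_from(entity: str) -> list[tuple[str, str]]:
    """Return (predicate, object) pairs for edges leaving the given node."""
    return [(t.predicate, t.obj) for t in triples if t.subject == entity]

print(sorted(nodes))
print(edges_from("Acme Plumbing"))
```

Each triple contributes one edge and up to two nodes, so a set of consistent statements on a page can be read as a small graph.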

Attribute

A specific property used to describe an entity. Attributes include details such as location, founder, industry, services, or product specifications.

Disambiguation

The process search engines use to determine which entity a term refers to when multiple entities share the same name or label. Disambiguation relies on context, related entities, and user intent signals.
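As a rough illustration of context-based disambiguation, the sketch below picks the candidate entity whose context terms overlap most with the words in a query. The candidate labels, the context word lists, and the `disambiguate` function are assumptions made for this example; production systems rely on far richer signals.

```python
from typing import Optional

# Hypothetical candidates that share the label "Top Gun", each with context terms.
CANDIDATES = {
    "Top Gun (1986 film)": {"movie", "film", "cast", "soundtrack", "director"},
    "Top Gun (Cigarette boat)": {"boat", "hull", "engine", "offshore", "cigarette"},
    "TOPGUN (US Navy program)": {"navy", "pilot", "training", "tactics", "fighter"},
}

def disambiguate(query: str) -> Optional[str]:
    """Return the candidate with the largest context overlap, or None if no overlap."""
    words = set(query.lower().split())
    best, best_overlap = None, 0
    for entity, context in CANDIDATES.items():
        overlap = len(words & context)
        if overlap > best_overlap:
            best, best_overlap = entity, overlap
    return best

print(disambiguate("top gun movie cast"))
print(disambiguate("top gun navy pilot training"))
print(disambiguate("top gun"))
```

With no surrounding context the function returns `None`, which reflects the point that a bare term alone is not enough to resolve an entity.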

For a more technical breakdown of the terminology used by modern algorithms, see our comprehensive AI LLM entity SEO glossary.

What Is Entity SEO?

Entity SEO is the practice of shaping how search engines classify and compare pages before ranking signals are applied. Search engines rank pages after identifying which entities the page represents and verifying those entities across other sources.

This is why some sites outperform competitors with more backlinks, more pages, and heavier on-page SEO. Search engines decide relevance first. Traditional ranking signals are applied afterward.

When entity signals are missing, pages are evaluated independently. When they are present, pages are evaluated as part of an existing topic and competitive set.

From Semantic Search to Google’s Knowledge Graph

Google shifted search toward semantic understanding by building the Google Knowledge Graph. That system stores entities, their attributes, and how they relate to one another.

This is why search results changed. Knowledge panels exist because Google recognizes specific entities. Google Discover surfaces content based on the topical relevance already established for those entities.

A search term can refer to more than one entity. User intent determines which one applies. Search engines resolve this using natural language processing and entity identification before rankings are calculated.

Weak entity identification leads to inconsistent results, while clear identification stabilizes relevance.

How Search Engines Use Entities at Query Time

Keyword research shows how queries are phrased, not how they are interpreted.

When a user submits a query, search engines first attempt to identify which entity or entities the query refers to. Only after that do they evaluate pages.

Pages are not ranked because they contain a phrase. They are ranked because they align with the inferred entity and its related entities. Search engines look for consistency across content, internal links, and structured data to confirm that alignment.

Entity-based SEO works by narrowing how a page can be interpreted. A page that clearly establishes its primary entity, supports it with related entities, and maintains the same context throughout gives search engines fewer assumptions to make.

Clear entity signals allow search systems to select pages without relying on probabilistic assumptions. Rankings improve because the meaning is consistent.

Entity SEO and Contextual Meaning

On-page SEO exists to remove ambiguity about what a page represents to search engines. Adding unique attributes to a topic is a powerful signal. This is the core of information gain in SEO: providing new, verifiable facts that help the Knowledge Graph expand its understanding of a subject.

Each page should center on a single primary entity. Supporting entities should belong to the same topic and appear in contexts that make their relationship clear. When those relationships are consistent across the page, search engines can identify what the content represents without relying on inference.

Entity optimization comes from showing how concepts connect, not from repeating a search term. Pages that reflect real-world relationships between ideas are easier for search engines to classify and retrieve. Pages built around keywords alone lack enough context for reliable classification.

When the entity and its supporting context are clear, search engines like Google can place the page correctly within the topic and return it for relevant searches without additional signals.

Structured Data, Schema Markup, and Knowledge Panels

Structured data is used to make entity information explicit. Schema markup gives search engines direct signals about what a page represents instead of forcing them to infer meaning from text alone.

When schema is implemented correctly, it clarifies the primary entity of a page and shows how that entity relates to services, locations, and topics referenced elsewhere on the site. This makes entity identification more reliable across web pages, especially when similar terms or overlapping concepts exist.

Search engines do not use structured data as a ranking shortcut. They use it to confirm information already discovered through crawling and natural language processing. Schema does not replace content. It verifies it.
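As an illustration of making entity information explicit, the Python sketch below builds a JSON-LD snippet using the schema.org `LocalBusiness` type. The helper name `build_local_business_jsonld`, the business details, and the example.com URLs are placeholders, and the choice of properties is an assumption about what a typical service business might declare rather than a required set.

```python
import json

def build_local_business_jsonld(name, url, city, region, founder, same_as):
    """Return a JSON-LD <script> tag describing a local business entity."""
    data = {
        "@context": "https://schema.org",
        "@type": "LocalBusiness",
        "name": name,
        "url": url,
        "address": {
            "@type": "PostalAddress",
            "addressLocality": city,
            "addressRegion": region,
        },
        "founder": {"@type": "Person", "name": founder},
        # sameAs points at other profiles that describe the same entity.
        "sameAs": list(same_as),
    }
    body = json.dumps(data, indent=2)
    return f'<script type="application/ld+json">\n{body}\n</script>'

# Placeholder values for a made-up business.
snippet = build_local_business_jsonld(
    name="Acme Plumbing",
    url="https://www.example.com",
    city="Austin",
    region="TX",
    founder="Jane Doe",
    same_as=["https://www.example.com/profiles/acme-plumbing"],
)
print(snippet)
```

The values in the markup should match what the visible page content and third-party listings already say, since the markup is meant to confirm that information rather than introduce it.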

For businesses, this directly affects visibility in features like the Google Knowledge Panel, Google Business Profile, and Google Maps. When entity signals align across schema markup, on-page content, and third-party references, search engines can trust the entity data. Consistent entity data improves eligibility for enhanced results and reduces classification volatility.

Entity Relationships and Topical Authority

Topical authority develops when a site covers a subject through the full set of entities that define it. Publishing more pages does not create authority on its own. Authority comes from showing search engines that the site understands how the topic is structured.

Search engines evaluate whether a site sits at the center of a topic or on the edge by examining entity relationships. A site that consistently references the same core entities, supporting entities, and concepts in the correct context signals depth. Over time, that consistency separates authoritative sources from sites that only touch the surface.

This shifts how content strategy works. Keyword lists are not enough. Entity research is required to understand which entities define the topic, which entities shape the surrounding ecosystem, and which abstract concepts influence search intent. The objective is a consistent representation of how entities within the topic relate to one another.

When content reflects how entities relate to each other in practice, search engines can classify the site as a reliable source for the subject rather than a collection of loosely related pages. To bridge the gap between abstract authority and measurable rankings, we developed the entity-driven link building system. This framework ensures every placement reinforces your brand’s core identity while passing link equity.

Entity SEO as the Core SEO Strategy

Entity SEO allows new pages to inherit existing classification signals instead of starting from zero.

Search engines use entity recognition to understand what users are searching for and which pages belong in those results. When entities are clear, pages are evaluated as part of a larger whole instead of on their own. That applies across regular search results, Google Discover, and AI-driven search surfaces.

This reduces the amount of new evidence required for pages to rank. Once a search engine understands an entity well, it is easier to place new pages, and links support something that already exists instead of trying to introduce it.

Sites structured this way build a position that search engines can recognize and reuse across related searches. Understanding these relationships is the foundation of how Google really ranks sites in 2026. Search engines have moved beyond simple keyword matching to a model based on factual verification.

Frequently Asked Questions

What is the difference between a keyword and an entity?

A keyword is a specific string of characters. An entity is the “thing” behind those characters. For example, “Top Gun” is a keyword. Depending on the context, the entity could be a 1986 movie, a 38-foot Cigarette boat, or a US Navy strike fighter tactics program. Entity SEO provides search engines with the attributes and relationships needed to identify exactly which one you are talking about.

Does Entity SEO replace traditional keyword research?

No. It evolves it. Traditional research tells you what people are typing. Entity research tells you what they are looking for and which related concepts you must cover to be considered an authority. If you optimize for the keyword “link building” but fail to mention related entities like “backlinks,” “anchor text,” or “referring domains,” search engines will have lower confidence in your topical depth.

How does Entity SEO change the way we build links?

It shifts the focus from raw power to semantic proximity. In a legacy strategy, any link with a high Domain Rating (DR) was considered good. In an entity-driven strategy, a link is a factual confirmation of your brand’s identity. A link from an authoritative site in your specific niche acts as an “edge” that connects your brand entity to the industry’s “Seed Sites.” This makes the link significantly more effective at moving rankings than a generic placement.

Can you have a brand entity without a Google Knowledge Panel?

Yes. A Knowledge Panel is a visual confirmation of an entity, but the entity can exist in the Knowledge Graph long before the panel appears. Many B2B brands and niche agencies have strong entity salience, meaning Google understands exactly who they are and what they do, without a public-facing panel. The goal of Entity SEO is the understanding, not just the visual box.

How does Entity SEO impact discovery in AI search engines like Perplexity or Gemini?

AI search engines built on large language models (LLMs) do not just crawl pages; they predict relationships between concepts. They are far more likely to cite a brand that has a clearly defined entity profile supported by consistent data across the web. By using Entity SEO to build a “verified” identity, you increase the chances that an AI agent will recommend your services as a trusted solution to a user’s query.

How does Natural Language Processing (NLP) actually identify entities on a page?

NLP is the technology that allows Google to move beyond “reading” words to “understanding” context. It uses a process called salience scoring to determine how central an entity is to your content. By analyzing the relationship between nouns and verbs, and looking for Semantic Triples (Subject-Predicate-Object), NLP identifies the “facts” on your page. If your content is vague or lacks specific industry nouns, the NLP confidence score drops, and your entity remains “fuzzy” in the Knowledge Graph.

Related Resources